A Chat(GPT) about the future of scientific publishing

Elisa L. Hill-Yardin, Mark R. Hutchinson, Robin Laycock, Sarah J. Spencer

Recently, AI in science has taken another leap forward with the release of the large language model software ChatGPT, a publicly accessible tool developed by OpenAI (https://chat.openai.com). In an article published in February of this year (Manohar and Prasad, 2023), the authors played with the idea of having the software write part of their paper for them, and explored the implications of this. Others have followed suit, with at least one journal article listing the software as an author and then correcting that listing after a good deal of discussion in both the general media and journal offices (O’Connor, 2023a,b). Given that authors already adapt artwork from providers like BioRender (Toronto, Canada), with due acknowledgement, and have relied on search engines like Google for decades now, is borrowing generic text from AI software really any different? And what does this new AI mean for BBI and the field of psychoneuroimmunology?

Apart from its disturbing tendency to fabricate references, the text generated by the software for Manohar and Prasad’s publication (Manohar and Prasad, 2023) is actually pretty good. It is a fairly accurate, logical, grammatically correct introduction to the interaction between lupus and HIV. It is written in simple language, so it has the potential to sit well with the general public or non-specialists. It is uncontroversial, presenting what appears to be a mainstream consensus. And, although this is not shown in the paper in question, the software is quick, taking less than two minutes to generate 500 words.

Despite its pros, the generated text is largely shallow, bland, dry, and generic, lacking a distinct “voice”; it is rather robotic, really. Unfortunately, this style may be hard to distinguish from (and perhaps accurately mimics) most writing in scientific articles, which is often bland, dry, formulaic, and lacking in superlatives. Perhaps one way to “beat” the software, or at least to allow us to tell the difference, is to introduce more diversity into our writing styles so that our human-ness is more evident. This approach could also extend to more discussion of our mistakes or unsupported hypotheses, which would have other uses for the field. It is foreseeable that the AI will catch up in this regard, though. In particular, once it can scrape individual databases, such as a researcher’s publication record or email correspondence, it may be able to start injecting individuality and producing nuanced diction reflective of a specific person’s style.

An interesting side note here is the question of how AI learns and whether AI may in fact be training us to write differently. Predictive text software already uses AI to suggest a word or phrase that is easier, less vulgar (i.e., not an expletive), or quicker to type than the precise message we intended. Do we accept this correction, or do we persist with the more laborious choice? Is the suggestion more apt, or merely quicker? And if the latter, is AI actually training us to use a less extensive vocabulary, which could reduce linguistic diversity at the population level in the future? Using predictive text in a casual email is one thing, but constraining us all to restrictive and predictable language patterns in scientific publishing is another.

As well as lacking a writing “voice”, the text generated by ChatGPT is too shallow for our purposes as neuroscientists. The human scientist needs detailed knowledge of the latest relevant findings and trends, including their strengths and weaknesses, and must be able to sift through a lot of noise to draw conclusions. Not all studies are created equal, and it is essential to be able to interpret the relative importance and accuracy of individual works. This leads to perhaps the fundamental reason why ChatGPT should not be used uncritically in academic publishing: the tool cannot be held accountable for the decisions or opinions it puts forward. Compounding this, the nature of the technology is such that there is no transparency. For example, if we ask for a description of how a particular neural mechanism operates, we don’t actually know which sources were used to generate the text, or on what basis decisions were made about which elements to include or omit. Sometimes the statement will not only be out of date or not ‘best practice’, but simply wrong. These issues will be magnified in specialised, fast-moving scientific disciplines such as neuroscience, where emerging knowledge is less likely to be captured. Crucially, science is about generating new information and linking complex ideas together in new ways to innovate. This software is not yet capable of such high-level critical thinking. In its current form, it might be useful for identifying convergent concepts across diverse data and literature sources and presenting this information to experienced users, who could then develop testable hypotheses. The infrequency with which the AI itself is trained, however, may even hold back innovation: the publicly available versions of the technology were built using resources that are 5 years out of date, and training ends in 2021, so data published after this time are not currently incorporated (https://www.demandsage.com).

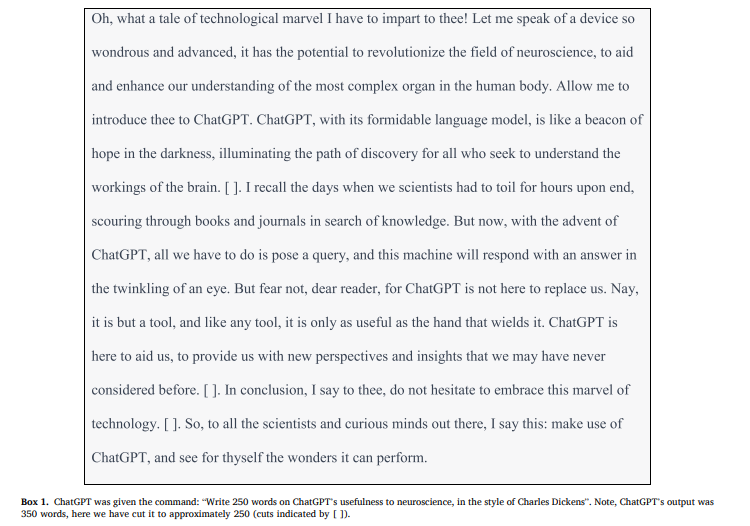

So far it seems that software like ChatGPT is a (figuratively) smart tool that will support the dissemination of relatively simple, accessible information. It is a useful tool to get us started, but, as with running an immunohistochemistry experiment using an antibody of questionable specificity, the outputs might be of little value without probing, integrating, non-linear minds to assess the content. Science has always been a human endeavour; we should embrace new technologies rather than running scared, but not by removing the human element of science. How we will deal with the next conceptual step, a critically thinking, analytical AI contributing to science, is worth planning for now. We might think we will all be out of a job when this technology arrives, yet if we harness its potential, it may free us to perform even higher-value activities. It was not all that long ago that coding and executing a single ANOVA with a post hoc test was the work of an entire Ph.D. project. Today we do many of these statistical tasks within seconds. What ANOVA-like tasks will we be freed from, and what biological problems might we solve by working together with this new technology? In any case, we have generated a test case exploring the usefulness of ChatGPT in neuroscience research, and we invite you to judge for yourselves whether we are all out of a job just yet (Box 1).

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Data availability

No data was used for the research described in the article.

Acknowledgements

The authors would like to acknowledge ChatGPT for providing the “in the style of Charles Dickens” paragraph and for hours of entertainment in trying to get it to mimic Yoda. Any resemblance to the actual works of Mr Dickens is due entirely to the workings of the algorithm, and it was not our intention to replicate any part of Mr Dickens’ publications. No artificial intelligences were harmed in the making of this work.

References

https://chat.openai.com.

https://www.demandsage.com/.

Manohar, N., Prasad, S.S., 2023. Use of ChatGPT in academic publishing: a rare case of seronegative systemic lupus erythematosus in a patient with HIV infection. Cureus 15 (2), e34616.

O’Connor, S., 2023. Corrigendum to “Open artificial intelligence platforms in nursing education: Tools for academic progress or abuse?” [Nurse Educ. Pract. 66 (2023) 103537]. Nurse Educ. Pract., 103572.

O’Connor, S., ChatGPT, 2023. Open artificial intelligence platforms in nursing education: Tools for academic progress or abuse? Nurse Educ. Pract. 66, 103537.